Generative Modelling with Design Constraints – Reinforcement Learning for Furniture Generation (2019)

Generative design has been explored in architecture to produce unprecedented geometries, however, in most cases, design constraints are limited during the design process. Limitations of existing generative design strategies include topological inconsistencies of the output geometries, dense design outputs, as the output format is often voxels or point clouds, and finally out-of-scope design constraints. In order to overcome such shortcomings, a novel reinforcement learning (RL) framework is explored in order to design a series of furniture with embedded design and fabrication constraints.

The proposed model consists of an agent that moves in discretized space to shape an object with a single “stroke”, which promises topological consistency. The model requires an input voxelized geometry as one of the geometrical constraints to limit the outer figure of the output

object. An agent shapes a new path inside the input geometry through a scheme of game playing. The agent learns how to shape a new geometry while satisfying given constraints via training of the model. The agent in each step can choose a possible action amongst a given set. This action is then evaluated and the appropriate reward is given, which stimulates the

progressive learning. Although this method is applicable to any functional forms, a table is selected as a primal case to be pursued in this study.

Initial experiments were executed in 2D space as it provides an easier set up and verification for object generation. Through these experiments, useful knowledge was obtained, that led to the transition in 3D space. For instance, it was found that a local window around the agent is more effective as state description rather than the whole space, and pixel-based (i.e, voxelbased in 3D space) rewarding system is quite controllable in comparison to latent space.

A Twin Delayed Deep Deterministic Policy Gradient (Fujimoto et al, 2018) is deployed for 3D object generation, because it is one of the best performing algorithms offering continuous action space. The continuous action space enables its agent to take diverse actions. In the first trained model, an agent can move to one of its 6 direct neighboring voxels, which successfully

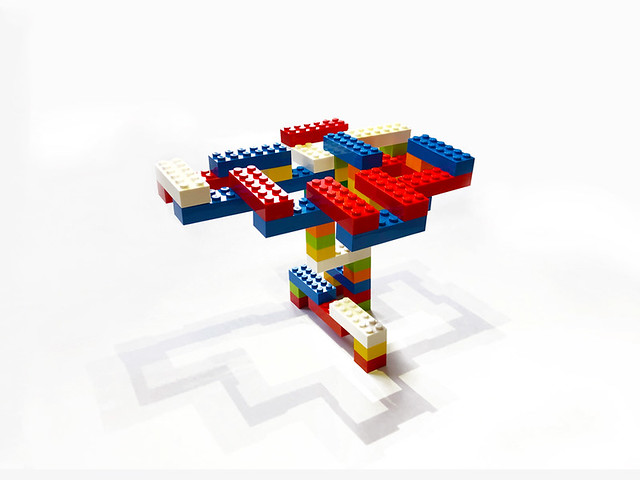

generates a series of tables in 3D space. In the second iteration, the number of possible actions are extended, meaning the agent can travel to all the voxels in a 3x3x3 neighbouring voxels. Lastly, a LEGO-fabrication-driven model is developed where an agent adds in each step a LEGO block chosen from a set of basic blocks. This fabrication scheme constrains the

design space, as the agent can fill the input geometry with limited available actions of LEGO block aggregation.

These experiments demonstrate that the proposed method of RL can generate a family of tables of unique aesthetics, satisfying topological consistency under design constraints. The model successfully fills a complicated geometry with a single stroke avoiding self-intersections

as much as possible, meaning that the agent learns motion planning available for any geometry. In addition, a diverse range of visual expressions can be obtained by differentiating the geometric interpretations of the agent’s trail, e.g. piping along the trail, smoothed meshes, or binary solidified voxels.