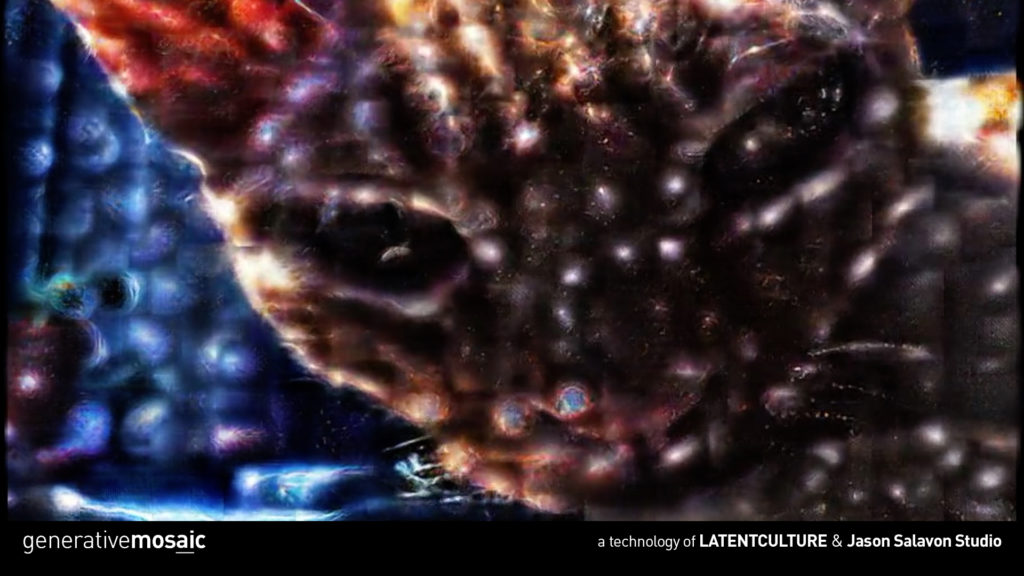

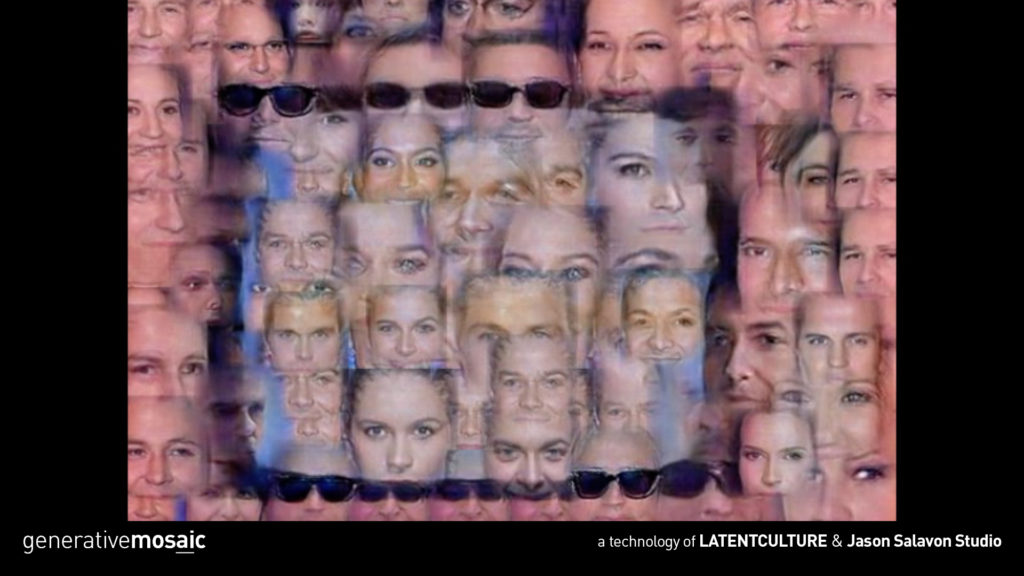

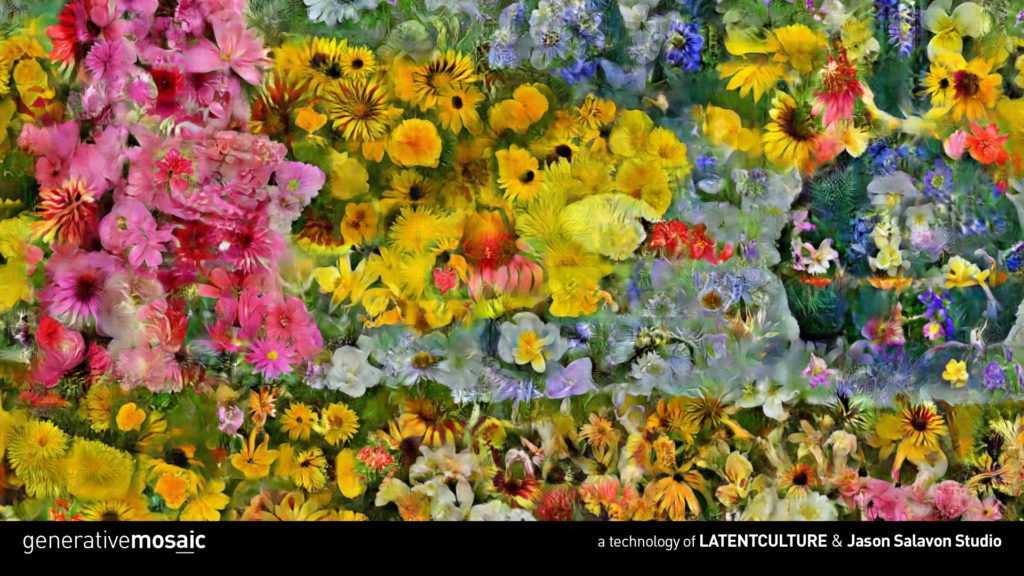

GenerativeMosaic is an application that recreates any video or photo using an entirely separate set of images. You upload a video, choose a dataset to render it with, and get the recreated clip back in seconds.

It resembles style transfer in that it is a cross-domain image manipulation tool. It differs in that it learns an entire “destination” dataset rather than sampling texture from a single image, which makes it closer to a generative, video version of photomosaics. It is also feed-forward and does not rely on a pre-trained classifier network, which makes it fast.
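For comparison, the classic (non-generative) photomosaic can be sketched in a few lines: split the target image into a grid and, for each cell, pick the library image whose average color is nearest. This is a minimal illustration of the traditional technique only, not GenerativeMosaic's method; the function names and the list-of-RGB-tuples image representation are assumptions made for the sketch.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]
Image = List[List[RGB]]  # hypothetical representation: rows of (r, g, b) pixels


def mean_color(img: Image) -> RGB:
    """Average RGB value over every pixel of an image or tile."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def crop(img: Image, top: int, left: int, size: int) -> Image:
    """Return the size x size tile whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in img[top:top + size]]


def photomosaic(target: Image, library: Sequence[Image], tile: int) -> List[List[int]]:
    """Classic photomosaic: for each full tile of `target`, return the index of the
    library image with the closest mean color (squared Euclidean distance in RGB).
    Partial tiles at the right/bottom edges are skipped."""
    lib_means = [mean_color(im) for im in library]
    grid = []
    for top in range(0, len(target) - tile + 1, tile):
        grid_row = []
        for left in range(0, len(target[0]) - tile + 1, tile):
            m = mean_color(crop(target, top, left, tile))
            best = min(
                range(len(lib_means)),
                key=lambda i: sum((m[c] - lib_means[i][c]) ** 2 for c in range(3)),
            )
            grid_row.append(best)
        grid.append(grid_row)
    return grid


if __name__ == "__main__":
    black, white = (0, 0, 0), (255, 255, 255)
    # 4x4 target: left half black, right half white
    target = [[black, black, white, white] for _ in range(4)]
    library = [[[black]], [[white]]]  # two 1x1 "photos"
    print(photomosaic(target, library, tile=2))  # [[0, 1], [0, 1]]
```

Because each cell is a nearest-neighbor lookup into existing images, the result is limited by how well the library happens to cover the target's colors.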

This project uses a GAN hybrid to generate cross-domain reconstructions of the input video. The architecture was developed in-house; while we cannot yet share the technical details, it has similarities to methods such as CycleGAN. It supports tiles of any shape, size, and position, and because it is feed-forward, it is very fast. We expect to reach real-time rendering shortly.
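Since the architecture itself is not disclosed, the sketch below only illustrates the best-known ingredient of CycleGAN, the method it is compared to. CycleGAN trains two generators, G: X → Y and F: Y → X, with adversarial losses plus a cycle-consistency term that penalizes ||F(G(x)) - x||₁ and ||G(F(y)) - y||₁, so that translating to the other domain and back recovers the input. Toy Python lists stand in for images, and all names here are hypothetical; this is not GenerativeMosaic's training code.

```python
from typing import Callable, List, Sequence

Vec = List[float]  # stand-in for a flattened image
Mapping = Callable[[Vec], Vec]  # stand-in for a generator network


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference (a rescaled L1 norm) between equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(G: Mapping, F: Mapping,
                           xs: Sequence[Vec], ys: Sequence[Vec]) -> float:
    """CycleGAN-style cycle loss, averaged over each batch:
    forward cycle x -> G -> F should return x,
    backward cycle y -> F -> G should return y."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


if __name__ == "__main__":
    double = lambda v: [2 * x for x in v]   # toy "G"
    halve = lambda v: [x / 2 for x in v]    # exact inverse of G
    identity = lambda v: list(v)            # a poor "F"
    xs, ys = [[1.0, 3.0]], [[2.0, 4.0]]
    print(cycle_consistency_loss(double, halve, xs, ys))     # 0.0
    print(cycle_consistency_loss(double, identity, xs, ys))  # 5.0
```

In CycleGAN this term is added, with a weight, to the adversarial losses; it is what lets the two domains be learned from unpaired examples.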

For over 20 years, I have made fine art through algorithmic means. My work is in the collections of MoMA, the Whitney, LACMA, and the Art Institute of Chicago, among others. A year ago, I formed a small group to work specifically on deep learning and art. Obviously, it’s a remarkable field.

Images and Video Courtesy of Jason Salavon