Latent Pulsations (2018)

This short video shows the learning process of a VAE with a two-dimensional latent space trained on text data, from random initialization to final convergence. The data consisted of around 300k consumer complaints about 12 different financial products (e.g. “credit card”, “student loan”, …), each represented by a different color (original data taken from: https://catalog.data.

Periodic random noise was added to the embeddings to create a beating pattern that syncs with the track “2 Minds” by InsideInfo. The track was time-stretched from its original 172 bpm to 160 bpm to better match the video frame rate. I chose “2 Minds” because the title reminded me of the encoder-decoder relationship in a VAE, which can be seen as two separate but collaborating minds playing a cooperative game (an idea I have previously written about: https://
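The frame rate is not stated here, but the reasoning behind the tempo change can be sketched with an assumed value. At a hypothetical 24 fps, a 160 bpm beat lasts exactly 24 · 60 / 160 = 9 frames. At 172 bpm the same calculation gives a non-integer 8.37 frames per beat. The minimal Python sketch below adds beat-synchronized noise to a sequence of 2-D embedding snapshots. Several pieces are illustrative assumptions and not the method actually used for the video: the envelope shape (a jump on each beat followed by exponential decay), the Gaussian noise, and the names `pulsate`, `scale` and `decay`.

```python
import numpy as np


def frames_per_beat(bpm: float, fps: float) -> float:
    """Number of video frames spanning one beat of the track."""
    return fps * 60.0 / bpm


def pulse_envelope(num_frames: int, bpm: float, fps: float, decay: float = 5.0) -> np.ndarray:
    """Hypothetical noise amplitude per frame: 1 on each beat, then exponential decay."""
    fpb = frames_per_beat(bpm, fps)
    frames = np.arange(num_frames)
    phase = (frames % fpb) / fpb  # position within the current beat, in [0, 1)
    return np.exp(-decay * phase)


def pulsate(embeddings: np.ndarray, bpm: float = 160.0, fps: float = 24.0,
            scale: float = 0.1, seed: int = 0) -> np.ndarray:
    """Add beat-synchronized Gaussian noise to 2-D embeddings.

    embeddings: array of shape (num_frames, num_points, 2), one snapshot per video frame.
    The 24 fps default is an assumption, not the video's documented frame rate.
    """
    rng = np.random.default_rng(seed)
    env = pulse_envelope(embeddings.shape[0], bpm, fps)
    noise = rng.standard_normal(embeddings.shape)
    # Broadcast the per-frame amplitude over all points and both latent dimensions.
    return embeddings + scale * env[:, None, None] * noise
```

With an integer number of frames per beat, every beat peak lands on exactly the same frame offset. The pulse therefore stays visually locked to the music instead of drifting by a fraction of a frame each beat.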

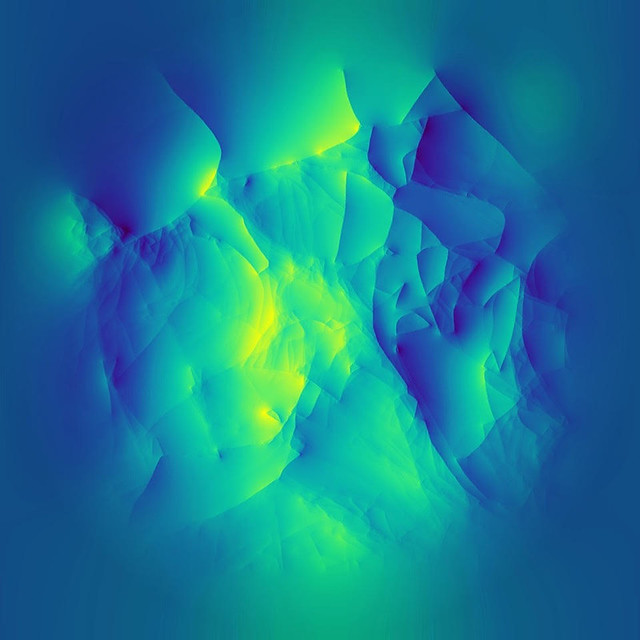

The cover artwork shows a visualization of the metric on the latent space itself (same model parameters and data as the video, but a different training run). Imagining the magnification factor associated with the metric as a particle density distribution, and considering the resulting diffusion process, gives this visualization of initial velocities, reminiscent of an alien landscape full of sharp ridges and valleys.
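One way to make this concrete, as a sketch under stated assumptions, starts by treating the decoder as a smooth map f from the 2-D latent space to data space. It then uses the common pullback metric G(z) = J(z)ᵀJ(z), with J the decoder Jacobian, and takes the magnification factor to be √det G(z). If that factor is read as a density ρ evolving under the diffusion equation ∂ρ/∂t = D∇²ρ, Fick's law gives the flux −D∇ρ. Dividing by ρ, the initial velocity is v = −D∇ρ/ρ = −D∇log ρ. In the sketch, `toy_decoder` is a hypothetical stand-in for the trained VAE decoder, and finite differences replace automatic differentiation. The exact metric and rendering used for the cover are not described here.

```python
import numpy as np


def toy_decoder(z: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a VAE decoder mapping a 2-D latent point to D outputs."""
    return np.tanh(W @ z + b)


def jacobian(f, z: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Central finite-difference Jacobian of f at z, shape (D, 2)."""
    cols = []
    for i in range(z.size):
        dz = np.zeros_like(z)
        dz[i] = eps
        cols.append((f(z + dz) - f(z - dz)) / (2 * eps))
    return np.stack(cols, axis=1)


def magnification_factor(f, z: np.ndarray) -> float:
    """sqrt(det(J^T J)): local area scaling of the pullback metric G(z) = J^T J."""
    J = jacobian(f, z)
    return float(np.sqrt(np.linalg.det(J.T @ J)))


def density_grid(f, xs: np.ndarray, ys: np.ndarray) -> np.ndarray:
    """Magnification factor on a grid; rows index y, columns index x."""
    return np.array([[magnification_factor(f, np.array([x, y])) for x in xs] for y in ys])


def initial_velocity(rho: np.ndarray, dx: float, dy: float, D: float = 1.0):
    """Initial velocity field of the diffusion d(rho)/dt = D * laplacian(rho).

    Fick's law gives the flux -D * grad(rho); dividing by rho yields v = -D * grad(log rho).
    """
    # np.gradient returns derivatives along axis 0 (y) and axis 1 (x), in that order.
    d_dy, d_dx = np.gradient(np.log(rho), dy, dx)
    return -D * d_dx, -D * d_dy


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W, b = rng.standard_normal((10, 2)), rng.standard_normal(10)
    xs = ys = np.linspace(-2.0, 2.0, 41)
    rho = density_grid(lambda z: toy_decoder(z, W, b), xs, ys)
    vx, vy = initial_velocity(rho, xs[1] - xs[0], ys[1] - ys[0])
    print(rho.shape, float(np.hypot(vx, vy).max()))
```

For a linear decoder the magnification factor is constant and the velocity field vanishes. Any ridges or valleys in such a field therefore come from regions where the decoder stretches or compresses the latent plane unevenly.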

Usually, when creating art with generative models, we think of the output the model produces. Here, however, both the video and the cover art flip this notion on its head. They show that latent spaces themselves can have an inherent beauty and artistic quality, even when the training data is decidedly dull, as the consumer complaint texts used here are.